Explaining Algorithmic Systems to Everyday Users

Description

This project explores the relationship between instructor and student understanding of the artificial intelligence (AI) algorithms that underlie their educational technology, and the impact of that understanding on decision-making for learning. The research will involve studies with people to investigate how algorithmic understanding affects system trust and decision-making for learning, as well as the development of "explainables": brief, engaging interactive tutoring systems that provide algorithmic understanding to classroom stakeholders. These two thrusts will yield a framework that designers of algorithmically enhanced learning environments can use to determine what level of algorithmic understanding users need in order to make informed decisions with their systems. The explainables developed by this project will be publicly accessible and usable by external projects, increasing algorithmic understanding not only for the initially intended stakeholders but also for the general public. The main contribution of this work is a methodologically rigorous investigation of the knowledge components of algorithmic understanding in learning contexts, one that can also be applied to model interpretability discussions in the wider machine learning community.

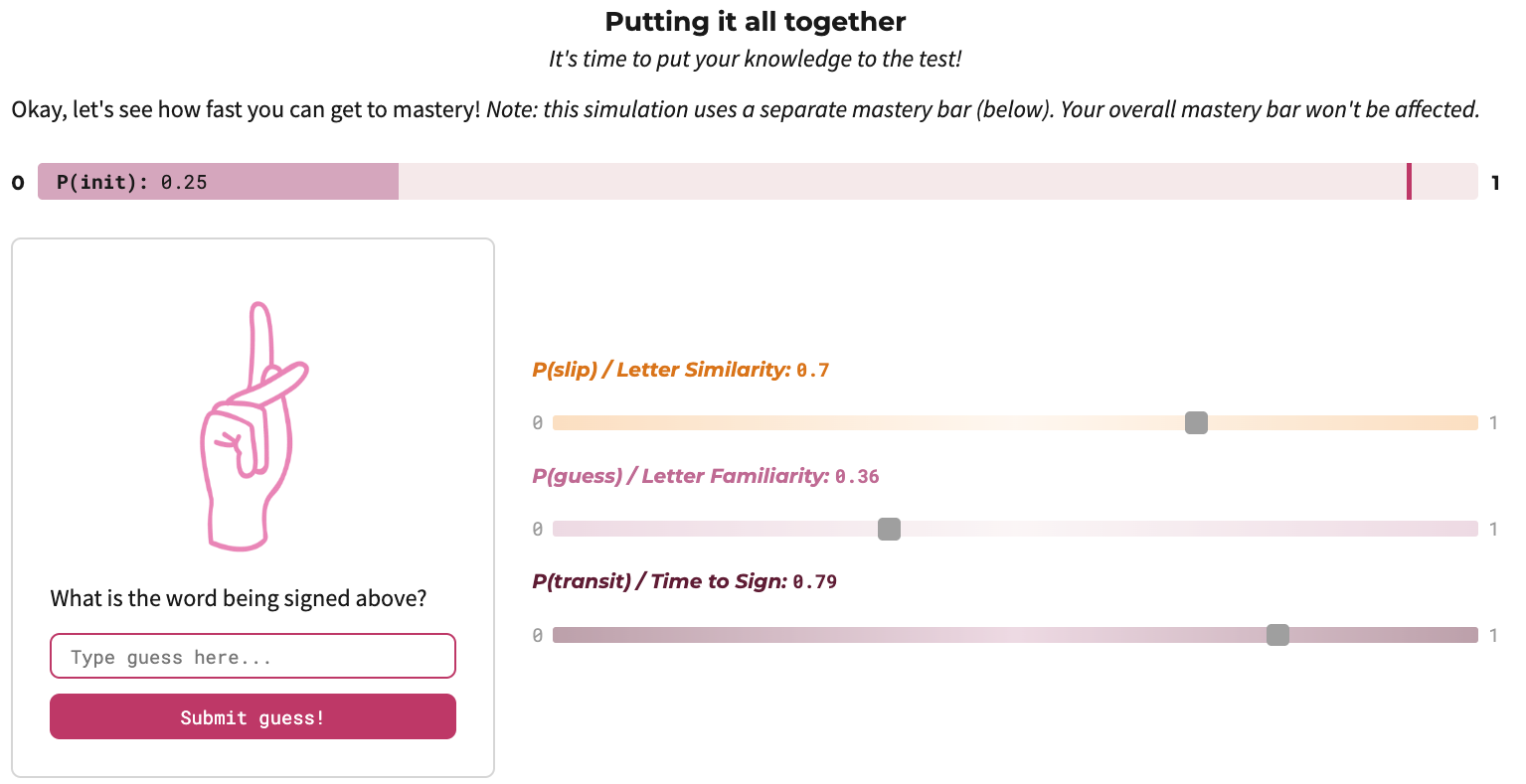

The research involves systematically identifying the concepts that qualify as "understanding" an AI algorithm, building brief interactive tutoring systems to target those concepts, and observing the resulting changes in system trust and decision-making in learning contexts. It combines approaches from the learning sciences, human-computer interaction, ethics, and machine learning. Student researchers will perform cognitive task analyses to identify hierarchical models of expert comprehension of AI models, apply a user-centered design process to develop explainables that teach the varying levels of expert comprehension, and perform evaluation studies comparing the impact of various explainables on algorithmic understanding, trust, and decision-making. The results will add to ongoing discussions about ethical algorithmic transparency in the larger machine learning community. They will also provide an actionable framework for developing a more AI-informed student and teacher body, as well as lightweight explainables that can be appended to external algorithmically enhanced learning environments.

Documentation

Many of my research group's AI Explainables are available here.

Sneirson, M., Chai, J., & Howley, I. (2024). A Learning Approach for Increasing AI Literacy Via XAI in Informal Settings. In Proceedings of the 25th Annual Conference on Artificial Intelligence in Education.

Yeh, C., Cowit, N., & Howley, I. (2023). Designing for Student Understanding of Learning Analytics Algorithms. In Proceedings of the 24th Annual Conference on Artificial Intelligence in Education.

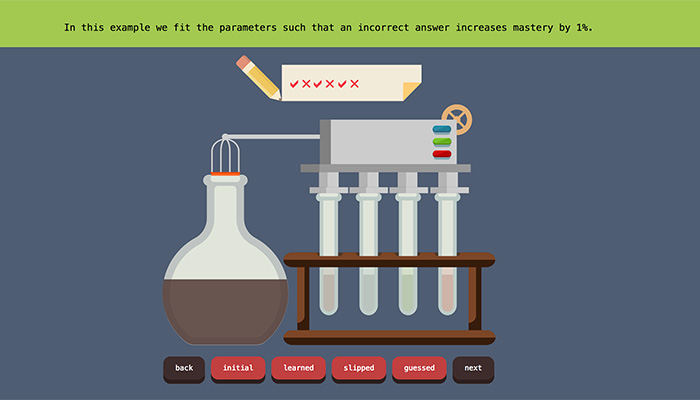

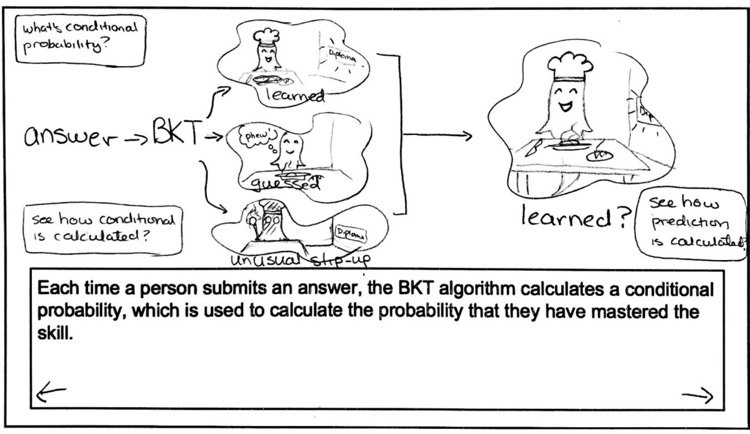

Zhou, T., Sheng, H., & Howley, I. (2020). Assessing Post-hoc Explainability of the BKT Algorithm. In Proceedings of the AAAI Conference on Artificial Intelligence, Ethics, and Society.

A 2020 MIT Technology Review article covering some of our work: "Why asking an AI to explain itself can make things worse."

Cowit, N., Yeh, C., & Howley, I. (2019). Tests, Memory, and Artificial Intelligence: How can we know what people know? In IEEE VIS Workshop on Visualization for AI Explainability.

Howley, I. (2019). NSF CRII: IIS: RUI: Understanding Learning Analytics Algorithms in Teacher and Student Decision-making.

Cho, Y., Mazzarella, G., Tejeda, K., Zhou, T., & Howley, I. (2018). What is Bayesian Knowledge Tracing? In IEEE VIS Workshop on Visualization for AI Explainability.

Howley, I. (2018). If an algorithm is openly accessible, and no one can understand it, is it actually open? In Artificial Intelligence in Education Workshop on Ethics in AIED 2018.