Let's Take a Test

(Don't worry, it isn't graded)

What is in the picture below?


The correct answer is Ĉielarko.

Did you get it right?

Probably not.

The answers were in Esperanto, a constructed international language created in the 19th century. If you don’t know Esperanto, you probably just guessed.

Guessing is not the best strategy, but anybody who has taken a multiple-choice test knows that a guess is not necessarily a wrong answer.

As there are 4 possible answers, we have a ¼ chance of a correct guess, or a probability of 0.25.

Let’s try one more:

What color is the flag?

(Hint: Look at the previous question)


The answer here is also Ĉielarko.

Did you get it right?

Probably!

There is still a guess probability of 0.25, so what happened?

You learned.

What If You Were a Computer?

Somebody inputs the correct answer.

How do we know for sure if they are guessing, or really “know” it?

Answer: We don’t.

We cannot know for sure, but Bayesian Knowledge Tracing (BKT) algorithms can help us estimate what people know.

Bayesian Knowledge Tracing

One piece of information we do have is the guess probability, the probability of getting the answer correct on a guess. We will call this probability P(guess).

This is the first of the four parameters of the Bayesian Knowledge Tracing algorithm.

Do you remember the value of P(guess) from our questions?

Because there were 4 possible answers to each of the multiple choice questions, and only 1 was correct, P(guess) was equal to 0.25.
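The relationship between the number of answers and P(guess) fits in a one-line function. This is a minimal sketch that assumes exactly one correct option and a guesser who picks uniformly at random; the function name `p_guess` is hypothetical.

```python
def p_guess(num_options: int) -> float:
    """Chance of a correct answer by uniform random guessing,
    assuming exactly one of `num_options` choices is correct."""
    if num_options < 1:
        raise ValueError("need at least one option")
    return 1 / num_options


print(p_guess(4))  # 0.25, as in the quiz above
```

Adding more answer choices lowers P(guess), which makes a correct answer stronger evidence of real knowledge.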

Let us consider the situation where a user inputs the correct answer to a multiple-choice question. P(guess) alone cannot tell us whether the user knows the answer or is guessing: it is just the probability of getting the correct answer with no prior knowledge.

What if we had information about what happened on a previous question?

We answered two questions. We will call these questions N and N+1.

Was there a difference between the two questions?

Yes!

We came into the second question with prior knowledge about Esperanto. Specifically, we had learned the word Ĉielarko (rainbow). This allowed us to pick the correct answer. We call the probability that the user has prior knowledge P(known).

For the first question N, P(known) was likely 0 (0%).

For the second question N+1, P(known) was around 1 (100%).

P(learning)

P(learning) is the probability that a user who does not yet know a skill will learn it after a learning opportunity (here, after attempting question N). In this case, P(learning) is also close to 1.

By answering the first question, the user is likely to gain mastery over the word Ĉielarko.

The longer an Esperanto word, the lower the probability of learning it [2].

P(slip)

There is one other situation to consider. It is possible to know the answer and still choose incorrectly, perhaps due to rushing, a misspelled word, or a misplaced click. This is called a “slip”. The probability of a slip, P(slip), is the final parameter in the Bayesian Knowledge Tracing algorithm.

A high value of P(slip) (e.g., P(slip) = 0.6) indicates that a user who knows the correct answer to a question is more likely to get the question incorrect than correct. A low value of P(slip) (e.g., P(slip) = 0.02) indicates that a user who knows the correct answer is almost certain to get the question correct.

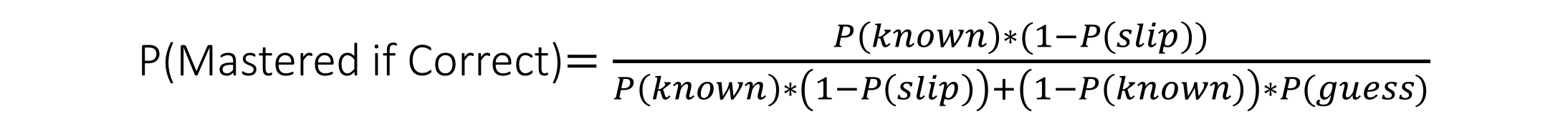

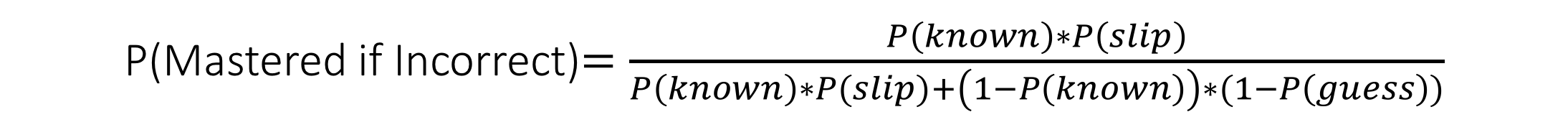
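Combining P(slip) with P(guess) and P(known) gives the overall probability of a correct response: a user who knows the skill answers correctly unless they slip, and a user who does not can still guess. The sketch below assumes those two cases are the only ways to answer; the function name `p_correct` is hypothetical.

```python
def p_correct(p_known: float, p_guess: float, p_slip: float) -> float:
    """Probability of a correct response under the BKT assumptions.

    Knows the skill:        correct unless they slip -> p_known * (1 - p_slip)
    Doesn't know the skill: correct only by guessing -> (1 - p_known) * p_guess
    """
    return p_known * (1 - p_slip) + (1 - p_known) * p_guess


# A user who certainly knows the answer, with the two slip values from the text
print(p_correct(1.0, 0.25, 0.6))   # knows it, but wrong more often than right
print(p_correct(1.0, 0.25, 0.02))  # knows it, almost always right
```

The same two terms reappear in the P(Mastered) formulas, where they act as the "knew it" and "guessed it" explanations for a correct answer.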

BKT: The Formula

Every time the user answers a question, our algorithm calculates P(Mastered), the probability that the user has learned the skill they are working on, using the parameters discussed above.

The formula for P(Mastered) depends on whether the user's response was correct [1].

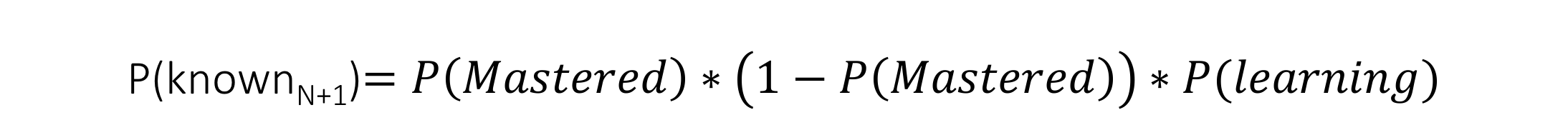
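Written out in this article's notation, the two formulas in the standard BKT formulation are usually given as follows (a transcription of the textbook form, which we assume matches the figure above):

```latex
\begin{aligned}
P(\text{Mastered if Correct}) &=
  \frac{P(\text{known})\,\bigl(1 - P(\text{slip})\bigr)}
       {P(\text{known})\,\bigl(1 - P(\text{slip})\bigr) + \bigl(1 - P(\text{known})\bigr)\,P(\text{guess})} \\[6pt]
P(\text{Mastered if Incorrect}) &=
  \frac{P(\text{known})\,P(\text{slip})}
       {P(\text{known})\,P(\text{slip}) + \bigl(1 - P(\text{known})\bigr)\,\bigl(1 - P(\text{guess})\bigr)}
\end{aligned}
```

Both are Bayes' rule: the numerator is the chance that the user knew the skill and gave this response, and the denominator is the overall chance of this response.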

For the following question, N+1, P(Mastered) is converted into the new value of P(known) using P(learning). Here P(Mastered) is whichever of P(Mastered if Correct) or P(Mastered if Incorrect) applies, depending on the user's response to question N [1].
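In the standard BKT formulation this conversion is commonly written as below: after question N, the user either had already mastered the skill, or had not and learned it during this opportunity.

```latex
P(\text{known})_{N+1} = P(\text{Mastered}) + \bigl(1 - P(\text{Mastered})\bigr)\,P(\text{learning})
```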

Once P(known) ≥ 0.95, we say the user has achieved mastery.
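The full update cycle can be sketched in a few lines of Python. This is a minimal sketch of the standard BKT update, assuming all parameters lie strictly between 0 and 1 so the division is safe; the class `BKTParams`, the functions `p_mastered` and `update_known`, and the example parameter values are hypothetical choices for illustration, not taken from the article's tool.

```python
from dataclasses import dataclass

MASTERY_THRESHOLD = 0.95  # mastery cutoff used in the text


@dataclass
class BKTParams:
    p_guess: float     # chance of a correct answer without knowing the skill
    p_slip: float      # chance of a wrong answer despite knowing the skill
    p_learning: float  # chance of learning the skill after an attempt


def p_mastered(p_known: float, correct: bool, params: BKTParams) -> float:
    """Posterior that the skill was known, given this response (Bayes' rule).

    Assumes 0 < p_guess < 1 and 0 < p_slip < 1 so the denominator is never 0.
    """
    if correct:
        known_and_obs = p_known * (1 - params.p_slip)
        unknown_and_obs = (1 - p_known) * params.p_guess
    else:
        known_and_obs = p_known * params.p_slip
        unknown_and_obs = (1 - p_known) * (1 - params.p_guess)
    return known_and_obs / (known_and_obs + unknown_and_obs)


def update_known(p_known: float, correct: bool, params: BKTParams) -> float:
    """New P(known) for question N+1: already mastered, or learned this time."""
    mastered = p_mastered(p_known, correct, params)
    return mastered + (1 - mastered) * params.p_learning


params = BKTParams(p_guess=0.25, p_slip=0.1, p_learning=0.3)
p_known = 0.0  # like question N: no prior knowledge of the word
for attempt, correct in enumerate([False, True, True, True], start=1):
    p_known = update_known(p_known, correct, params)
    status = "mastered" if p_known >= MASTERY_THRESHOLD else "not yet"
    print(f"attempt {attempt}: P(known) = {p_known:.3f} ({status})")
```

With these illustrative values, a wrong first answer still leaves P(known) at P(learning), and a few correct answers in a row push it past 0.95.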

Now that you’ve had a chance to learn about the four parameters, here’s a tool that can help you explore the relationships between them and how exactly each influences the probability calculations underlying BKT.

Memory Game

Bayesian Knowledge Tracing algorithms can be understood through the card game Memory. In Memory, a deck of cards is spread out face down, and the goal is to find pairs of matching cards. Click two cards to see whether you found a match.

Remember, P(Mastered) depends on whether the student answers correctly. Combined with P(learning), this probability becomes the new value of P(known).

Play the Memory game below. See if you can achieve mastery!


Here are some prompts to develop a deeper intuition about BKT:

- Find a match. Click it again. What happens?

  - P(known) will continue to increase, since each repeated click on a known match counts as another correct answer.

  - For this memory game, our Bayesian Knowledge Tracing algorithm can be "tricked" into achieving mastery.

- What happens if P(guess) and P(slip) are set at 0.5?

  - The P(Mastered) formula differs depending on whether a response is correct or incorrect. However, both formulas give the same probability when P(guess) = P(slip) = 0.5.

  - P(known) will be the same whether or not a match is found. Mastery no longer depends on correctness.

- What happens if P(guess) and P(slip) both exceed 0.5?

  - P(Mastered) is higher if the user answers incorrectly than if the user answers correctly. Picking two different cards increases the estimated mastery more than finding a match, which makes no sense. More precisely, this inversion happens whenever P(guess) + P(slip) > 1.

  - BKT works best when P(guess) is below 0.3 and P(slip) is below 0.1 [1].
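The three prompts can also be checked numerically. This is a minimal sketch using the standard BKT update with hypothetical parameter values; it assumes that re-clicking an already found match is recorded as one more correct answer, and the function names are illustrative only.

```python
def p_mastered(p_known: float, correct: bool, p_guess: float, p_slip: float) -> float:
    """Bayes-rule posterior that the skill was known, given one response."""
    if correct:
        num = p_known * (1 - p_slip)
        den = num + (1 - p_known) * p_guess
    else:
        num = p_known * p_slip
        den = num + (1 - p_known) * (1 - p_guess)
    return num / den


def next_known(p_known: float, correct: bool, p_guess: float, p_slip: float,
               p_learning: float) -> float:
    """P(known) for the next attempt: already mastered, or learned this time."""
    mastered = p_mastered(p_known, correct, p_guess, p_slip)
    return mastered + (1 - mastered) * p_learning


# Prompt 1: re-clicking the same match keeps counting as a correct answer.
p = 0.1
for clicks in range(1, 20):
    p = next_known(p, True, p_guess=0.25, p_slip=0.1, p_learning=0.1)
    if p >= 0.95:
        print(f"'mastered' after {clicks} repeated correct answers")
        break

# Prompt 2: with P(guess) = P(slip) = 0.5, both responses give the same posterior.
print(p_mastered(0.4, True, 0.5, 0.5) == p_mastered(0.4, False, 0.5, 0.5))

# Prompt 3: with P(guess) + P(slip) > 1, a wrong answer raises P(Mastered) more.
print(p_mastered(0.4, True, 0.7, 0.6) < p_mastered(0.4, False, 0.7, 0.6))
```

The second and third checks follow from the formulas: a correct answer raises P(Mastered) above P(known) exactly when 1 - P(slip) > P(guess), so the sign flips once P(guess) + P(slip) passes 1.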